Source: Culture | The Guardian

📂 Category: OpenAI,Bryan Cranston,Technology,Television & radio,Culture,Artificial intelligence (AI)


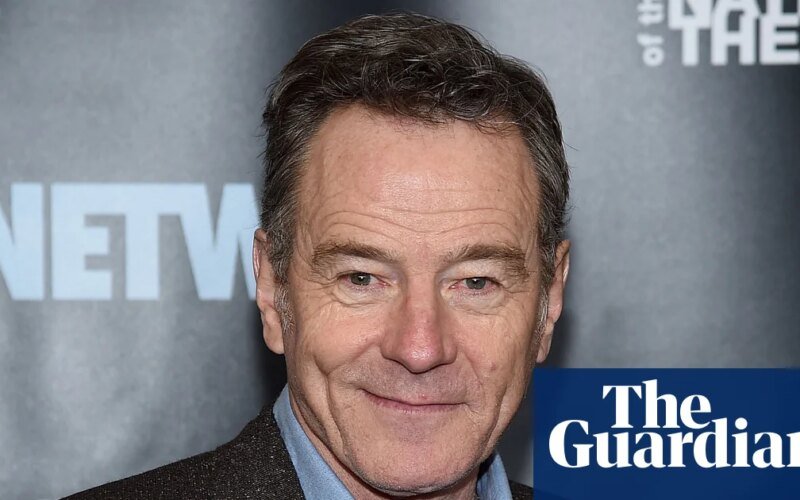

Bryan Cranston said he was “grateful” to OpenAI for cracking down on deepfakes of him on the company’s generative AI video platform Sora 2, after users were able to generate his voice and likeness without his consent.

The Breaking Bad star approached the actors’ union Sag-Aftra to raise his concerns after Sora 2 users were able to generate his likeness during the video app’s recent launch phase. On October 11, the Los Angeles Times described a Sora 2 video in which “Michael Jackson takes a selfie video with an image of Breaking Bad star Bryan Cranston.”

Living people must ostensibly give their consent, or opt-in, to appear in Sora 2, with OpenAI stating since its launch that it is taking “measures to prevent the depiction of public figures” and that it has “guardrails intended to ensure that audio and video are used with your consent.”

But when Sora 2 launched, several publications including the Wall Street Journal, Hollywood Reporter, and LA Times reported widespread outrage in Hollywood after OpenAI allegedly told several talent agencies and studios that if they didn’t want their clients or copyrighted materials replicated on Sora 2, they would have to opt out, rather than opt in.

OpenAI disputed these reports, telling the Los Angeles Times that it had always intended to give public figures control over how their images were used.

On Monday, Cranston issued a statement via Sag-Aftra, thanking OpenAI for “improving its guardrails” to prevent users from generating his likeness again.

“I felt deeply concerned not only for myself, but for all artists whose work and identity could be misused in this way,” Cranston said. “I am grateful to OpenAI for their policy and for improving their guardrails, and I hope that they and all companies involved in this work will respect our personal and professional right to manage replication of our voice and likeness.”

Two of Hollywood’s biggest agencies, Creative Artists Agency (CAA) and United Talent Agency (UTA) – which represents Cranston – have raised repeated alarms about the potential risks of Sora 2 and other generative AI platforms to their clients and careers.

But on Monday, UTA and CAA co-signed a statement with OpenAI, Sag-Aftra and the talent agents’ union, the Association of Talent Agents, stating that what happened to Cranston was wrong, and that they would all work together to protect “actors’ right to determine how and whether they can be emulated.”

“While it has been OpenAI’s policy from the beginning to require opt-in for the use of voice and likeness, OpenAI expressed regret for these unintentional generations. OpenAI has strengthened the guardrails around replication of voice and likeness when individuals do not opt in,” the statement read.

Actor Sean Astin, the new president of Sag-Aftra, warned that Cranston is “one of countless artists whose voice and likeness are at risk of widespread misappropriation through replication technology.”

“Bryan has done the right thing by reaching out to his union and his professional representatives to address the matter. This particular case has a positive resolution,” Astin said. “I’m thrilled that OpenAI has committed to using an opt-in protocol, where all artists have the ability to choose whether they want to participate in the exploitation of their voice and likeness using AI.”

“Simply put, opt-in protocols are the only way to do business and the No FAKES Act will make us safer,” he added, referring to the No FAKES Act currently being considered by Congress, which seeks to ban the production and distribution of AI-generated replicas of any individual without their consent.

OpenAI publicly supports the No FAKES Act, with CEO Sam Altman saying the company is “deeply committed to protecting performers from misappropriation of their voices and likenesses.”

Sora 2 allows users to generate “historical figures,” broadly defined as anyone who is both famous and dead. However, OpenAI recently agreed to allow representatives of “recently deceased” public figures to request that their likenesses be blocked from Sora 2.

Earlier this month, OpenAI announced that it had “worked together” with the estate of Martin Luther King Jr. and, at their request, temporarily paused the ability to depict King in Sora 2 as the company “strengthens guardrails for historical figures.”

Recently, Zelda Williams, the daughter of the late actor Robin Williams, implored people to “please stop” sending her AI-generated videos of her father, while Kelly Carlin, the daughter of the late comedian George Carlin, described AI-generated videos of her father as “overwhelming and frustrating.”

Legal experts have speculated that generative AI platforms are allowing depictions of dead historical figures in order to test what is permissible under the law.

