

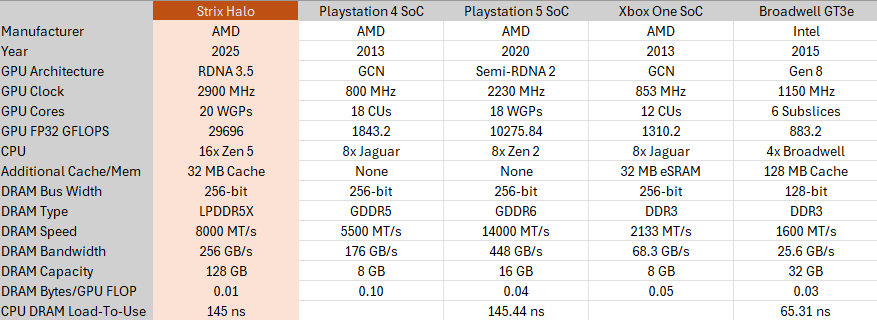

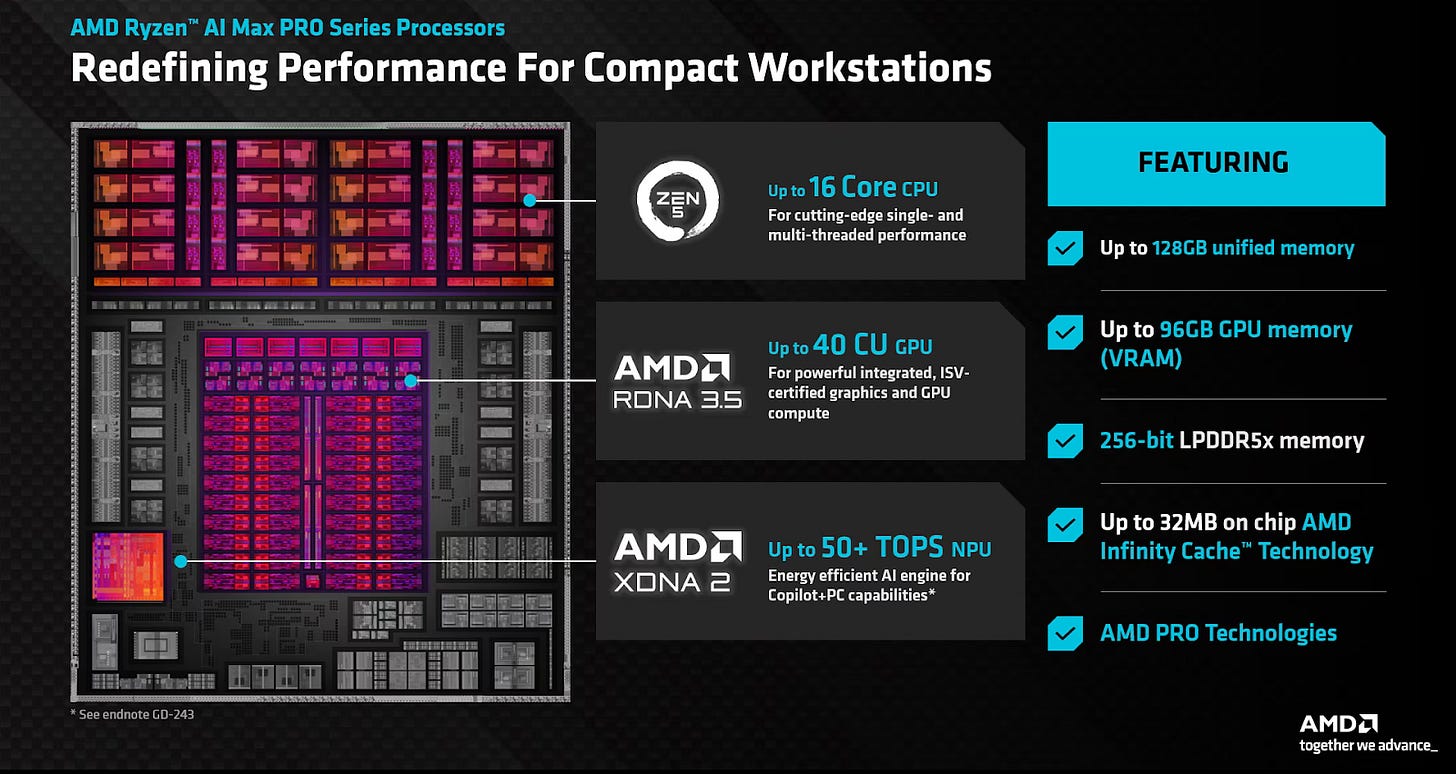

Strix Halo is the codename for AMD’s highest end mobile chip, which is used in the Ryzen AI MAX series. It combines a powerful CPU with 16 Zen 5 cores and a large GPU with 20 RDNA 3.5 Workgroup Processors (WGPs). The sizeable iGPU makes Strix Halo particularly interesting because GPUs have high bandwidth requirements. Strix Halo tackles that with a 256-bit LPDDR5X-8000 setup combined with 32 MB of memory side cache. The latter is often referred to as Infinity Cache, or MALL (Memory Attached Last Level). I’ll refer to it as Infinity Cache for brevity.

Infinity Cache has been around since RDNA2 in AMD’s discrete consumer GPU lineup, where it helped AMD hit high performance targets with lower DRAM bandwidth requirements. However, Infinity Cache’s efficacy has so far been difficult for me to evaluate. AMD’s discrete GPUs have performance monitoring facilities accessible through AMD’s developer tools. But those tools stop providing information past L2. Strix Halo stands out because it has an Infinity Cache implementation, and all the accessible performance monitoring features typical of a recent AMD GPU. That includes programmable performance counters at Infinity Fabric and memory controllers. It’s an opportunity to finally get insight into how well AMD’s Infinity Cache does its job in various graphics workloads.

Special thanks go out to ASUS for sampling their ROG Flow Z13. This device implements AMD’s Ryzen AI MAX+ 395 with 32 GB of LPDDR5X in a thin and light form factor. It superficially resembles a convertible tablet from Microsoft’s Surface line, and is remarkably portable for a device with gaming credentials. Without ASUS’s help, this article wouldn’t have been possible.

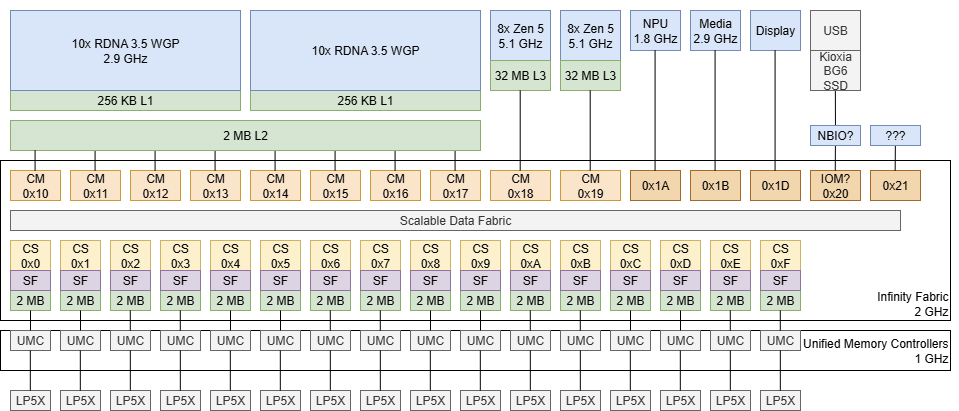

AMD’s Infinity Fabric aims to abstract away details of how data travels across the chip. It does so by providing endpoints with well defined interfaces to let blocks make or handle memory requests. Infinity Fabric also provides a set of programmable performance counters. AMD documents a single DATA_BW performance event that counts data beats at endpoints. DATA_BW targets an endpoint via its 8-bit instance ID, and can count either reads or writes. AMD never documented Infinity Fabric instance IDs for Strix Halo. So, I did some guessing by generating traffic at various blocks and observing bandwidth counts at all possible instance IDs.
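The guessing process can be sketched as a simple comparison: record per-instance bandwidth while idle, record it again while one block generates traffic, and see which instance IDs light up. The function name, the threshold, and the readings below are all hypothetical and only illustrate the idea. This is not the actual tool or real counter data.

```python
def find_active_instances(baseline, loaded, min_delta_gbps=1.0):
    """Return instance IDs whose counted bandwidth rose by at least min_delta_gbps.

    baseline and loaded map an 8-bit instance ID to bandwidth in GB/s.
    """
    return sorted(
        inst
        for inst in loaded
        if loaded[inst] - baseline.get(inst, 0.0) >= min_delta_gbps
    )


# Made-up readings for a handful of the 256 possible instance IDs.
baseline = {0: 0.4, 1: 0.5, 16: 0.1, 24: 0.2}
gpu_test = {0: 5.1, 1: 5.0, 16: 9.8, 24: 0.2}

active = find_active_instances(baseline, gpu_test)
print(active)  # [0, 1, 16]
```

In this made-up run, IDs 0 and 1 behave like memory-side endpoints that see all DRAM-backed traffic, while ID 16 only responds to the block under test.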

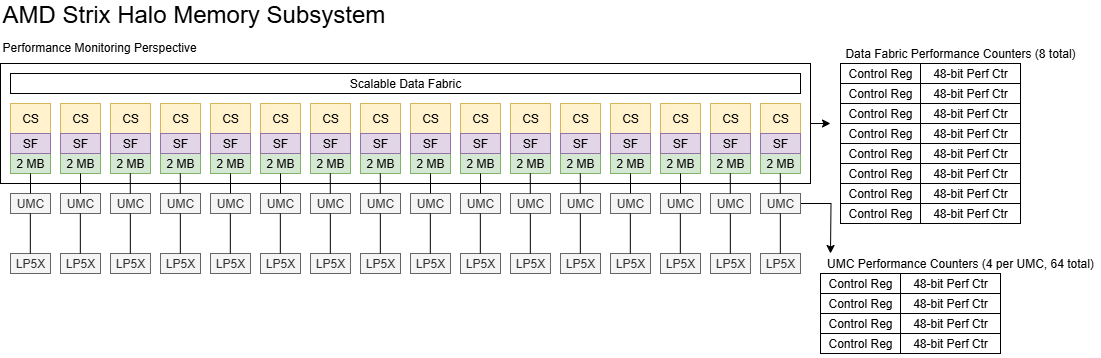

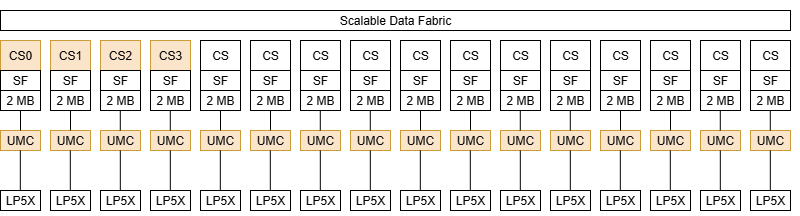

Instance IDs start from the Coherent Stations (CS-es), just like on server platforms. CS blocks sit in front of memory controllers and ensure cache coherency by probing another block if it might have a modified copy of the requested cacheline. If it doesn’t, which is most of the time, the CS will pass the request on to its attached Unified Memory Controller (UMC). Because CS blocks observe all requests to physical memory backed by DRAM, they’re a logical place to implement a memory side cache. That’s exactly what AMD does on chips with Infinity Cache. Cache hits let the CS avoid going to the UMC.

Strix Halo has 16 memory controllers and CS instances, each handling a 16-bit LPDDR5X channel. The GPU occupies the next eight instance IDs, suggesting it has a wide interface to Infinity Fabric. CPU core clusters come next. Each octa-core Zen 5 Core Complex (CCX) connects to Infinity Fabric via one endpoint. Miscellaneous blocks follow. These include the NPU, media engine, the display engine, and a mystery.
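The ordering described above can be written down as a small lookup. This is only a sketch: the text gives the order of the blocks, so numbering from zero with no gaps is an assumption, as is the count of two CCX endpoints (inferred from 16 cores split into octa-core CCXs). The exact IDs of the miscellaneous blocks are not given, so they fall under a catch-all label.

```python
CS_COUNT = 16        # one Coherent Station per 16-bit LPDDR5X channel
GPU_COUNT = 8        # the GPU occupies the next eight instance IDs
CCX_COUNT = 2        # assumed: 16 Zen 5 cores in two octa-core CCXs
CHANNEL_BITS = 16


def classify_instance(instance_id):
    """Map an 8-bit instance ID to a block type under the assumed layout."""
    if not 0 <= instance_id <= 0xFF:
        raise ValueError("instance IDs are 8 bits wide")
    if instance_id < CS_COUNT:
        return "CS"
    if instance_id < CS_COUNT + GPU_COUNT:
        return "GPU"
    if instance_id < CS_COUNT + GPU_COUNT + CCX_COUNT:
        return "CCX"
    return "other"  # NPU, media engine, display engine, unknown, or unused


total_bus_bits = CS_COUNT * CHANNEL_BITS
print(total_bus_bits)         # 256
print(classify_instance(20))  # GPU
```

Sixteen 16-bit channels add up to the 256-bit memory bus quoted earlier.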

Cache misses usually cause a request to the next level in the memory hierarchy, while cache hits do not. Therefore, my idea is to compare traffic levels at the CS and UMC levels. Traffic that shows up at the CS but not at the UMCs can be used as a proxy for Infinity Cache hits. There are a few problems with that approach though.
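As a minimal sketch of that proxy, the fraction of CS traffic that never reaches the UMCs can be computed as below. The function name and the clamping are my own choices. Clamping covers intervals where measurement error makes UMC traffic appear higher than CS traffic.

```python
def proxy_hitrate(cs_gbps, umc_gbps):
    """Fraction of CS-level traffic that did not show up at the UMCs.

    Clamped to [0, 1] because the two measurements carry independent error.
    """
    if cs_gbps <= 0:
        return 0.0
    return max(0.0, min(1.0, 1.0 - umc_gbps / cs_gbps))


print(proxy_hitrate(200.0, 50.0))   # 0.75
print(proxy_hitrate(100.0, 120.0))  # 0.0
```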

First, Strix Halo only provides eight Infinity Fabric performance counters. I have to use two counters per endpoint to count both read and write data beats, so I can only monitor four CS-es simultaneously. Memory traffic is interleaved across channels, so taking bandwidth observed at four CS-es and multiplying by four should give a reasonably accurate estimate of overall bandwidth. But interleaving isn’t perfectly even in practice, so I expect a few percentage points of error. The UMC situation is easier. Each UMC has its own set of four performance counters, letting me monitor all of them at the same time. I’m using one counter per UMC to count Column Address Strobe (CAS) commands. Other available UMC events allow monitoring memory controller frequency, bus utilization, and ACTIVATE or PRECHARGE commands. But that stuff goes beyond what I want to focus on here, and collecting more data points would make for annoyingly large spreadsheets.
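The two estimates can be sketched roughly as follows. The function names are hypothetical, and the bytes moved per CAS command is left as a parameter because the text does not state it. The 64 used in the example is purely illustrative.

```python
TOTAL_CS = 16  # Strix Halo has 16 Coherent Stations


def estimate_cs_bandwidth(sampled_bytes_per_sec):
    """Scale bandwidth seen at the monitored CS-es up to all 16.

    Relies on traffic being interleaved roughly evenly across channels.
    """
    if not sampled_bytes_per_sec:
        raise ValueError("need at least one monitored CS")
    scale = TOTAL_CS / len(sampled_bytes_per_sec)
    return sum(sampled_bytes_per_sec) * scale


def umc_bandwidth(cas_counts, bytes_per_cas, interval_s=1.0):
    """Convert CAS command counts from every UMC into bytes per second.

    bytes_per_cas is an assumption supplied by the caller.
    """
    return sum(cas_counts) * bytes_per_cas / interval_s


# Four monitored CS-es, read plus write bytes per second (made-up values).
cs_total = estimate_cs_bandwidth([10e9, 11e9, 9e9, 10e9])
print(cs_total)  # 160000000000.0

# All 16 UMCs, CAS commands counted over one second (made-up values).
umc_total = umc_bandwidth([1000] * 16, bytes_per_cas=64)
print(umc_total)  # 1024000.0
```

The uneven values in the first list mimic imperfect interleaving, which is where the few percentage points of error come from.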

Cross-CCX traffic presents a second source of error as mentioned above. If a CS sends a probe that hits a modified line, it won’t send a request to the UMC. The request is still being satisfied out of cache, just not the Infinity Cache that I’m interested in. I expect this to be rare because cross-CCX traffic in general is usually low compared to requests satisfied from DRAM. It’ll be especially rare in the graphics workloads I’m targeting here because Strix Halo’s second CCD tends to be parked in Windows.

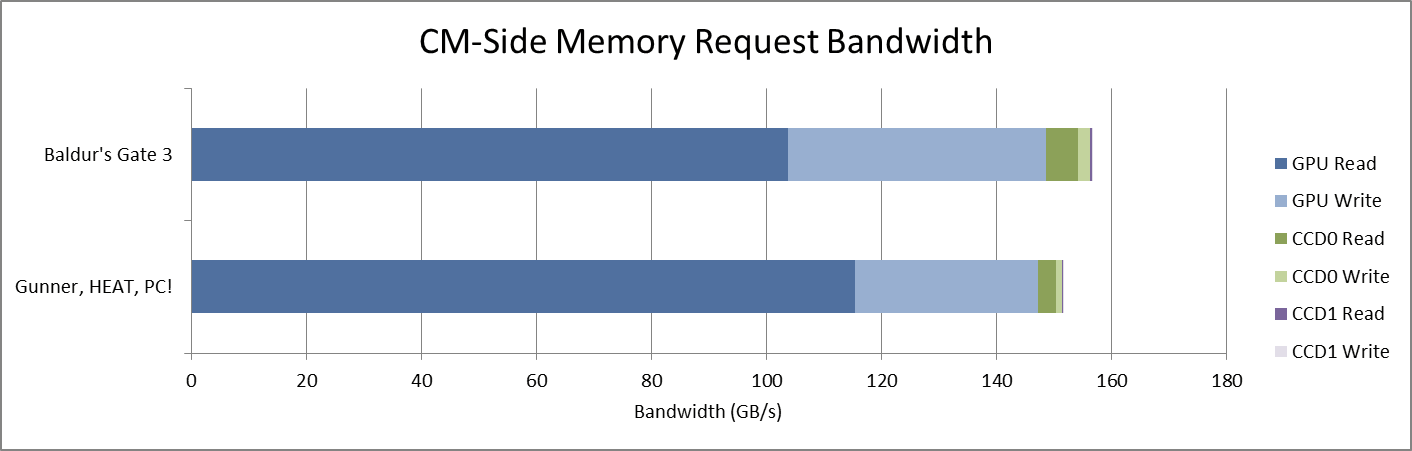

CPU-side traffic in general presents a more significant issue. Strix Halo’s Infinity Cache narrowly targets the GPU, and only GPU-side memory requests cause cache fills. CPU memory accesses will be counted as misses in a hitrate calculation. That may be technically correct, but misses the point of Infinity Cache. Most memory traffic comes from the GPU, but there’s enough CPU-side traffic to create a bit more of an error bar than I’d like. Therefore, I want to focus on whether Infinity Cache is handling enough traffic to avoid hitting DRAM bandwidth bottlenecks.

A final limitation is that I’m sampling performance counters with a tool I wrote years ago. I wrote it so I could look at hardware performance stats just like how I like looking at Task Manager or other monitoring utilities to see how my system is doing. Because I’m updating a graphical interface, I sample data every second and thus have lower resolution compared to some vendor tools. Those can often sample at millisecond granularity or better. That opens the window to underestimating bandwidth demands if a bandwidth spike only occurs over a small fraction of a second. In theory I could write another tool. But Chips and Cheese is an unpaid hobby project that has to be balanced with a separate full time job as well as other free time interests. Quickly rigging an existing project to do my bidding makes more efficient use of time, because there’s never enough time to do everything I want.
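To illustrate how coarse sampling can hide a short spike, the sketch below averages a synthetic millisecond-resolution trace over different window sizes. The trace is made up: a 50 GB/s background with a 100 ms burst at 250 GB/s.

```python
def average_per_window(samples, window):
    """Average consecutive, non-overlapping windows of `window` samples."""
    return [
        sum(samples[i:i + window]) / window
        for i in range(0, len(samples) - window + 1, window)
    ]


# One second of synthetic 1 ms samples, in GB/s.
trace = [50.0] * 1000
for ms in range(400, 500):
    trace[ms] = 250.0

one_second = average_per_window(trace, 1000)
ten_ms = average_per_window(trace, 10)

print(one_second)   # [70.0]
print(max(ten_ms))  # 250.0
```

A 1-second sample reports 70 GB/s for an interval that briefly ran close to the DRAM limit, so per-second peaks should be read as lower bounds on instantaneous demand.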

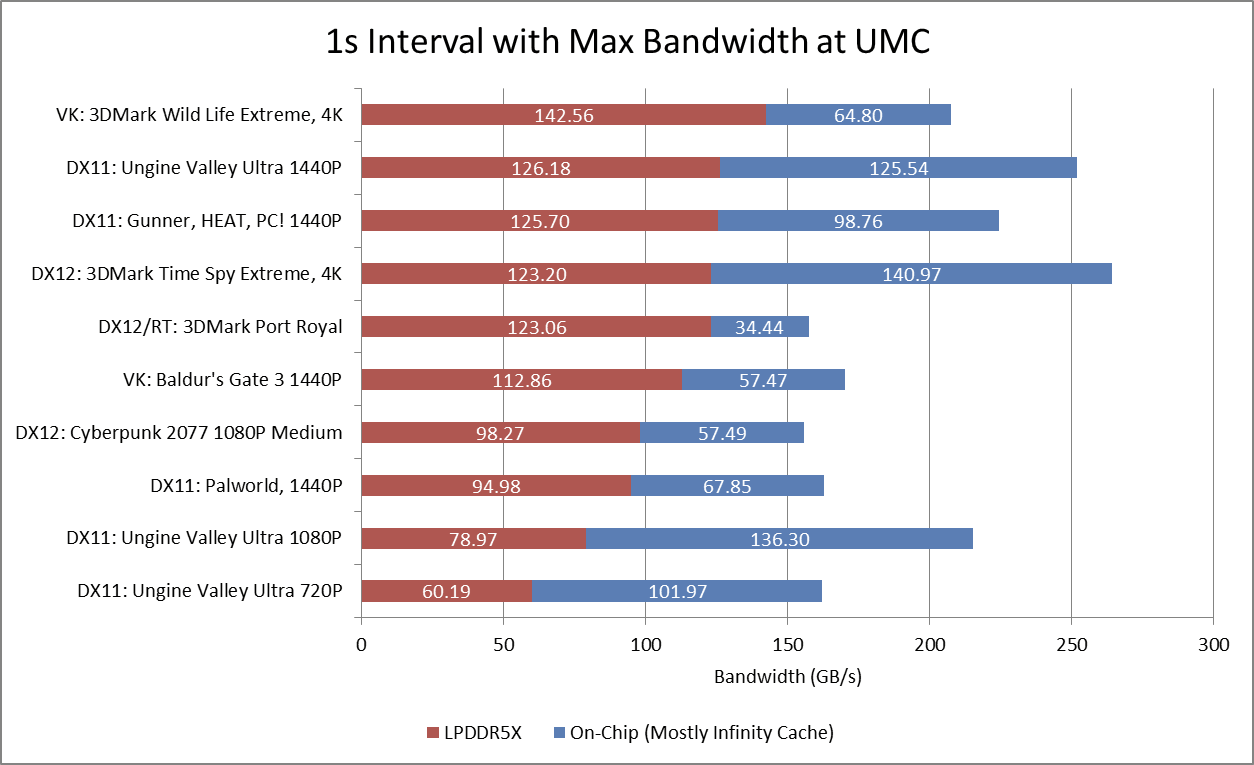

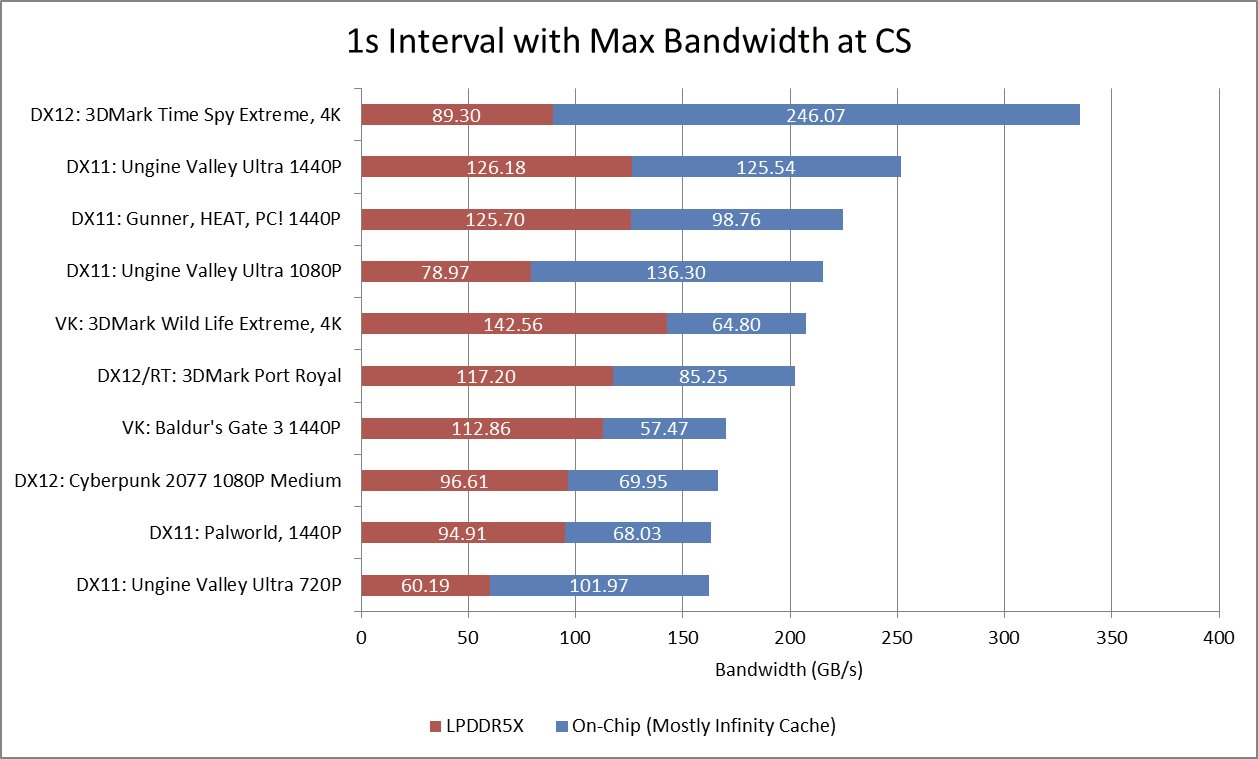

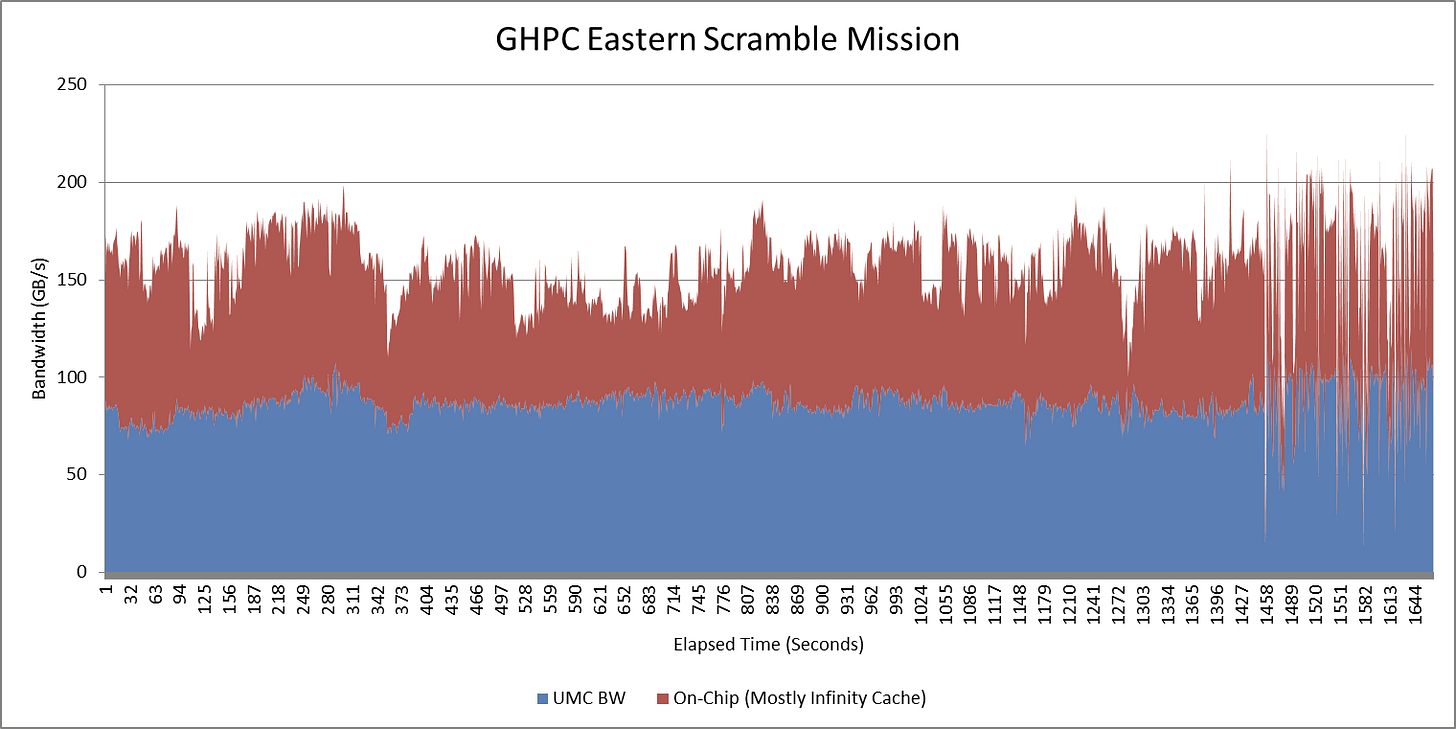

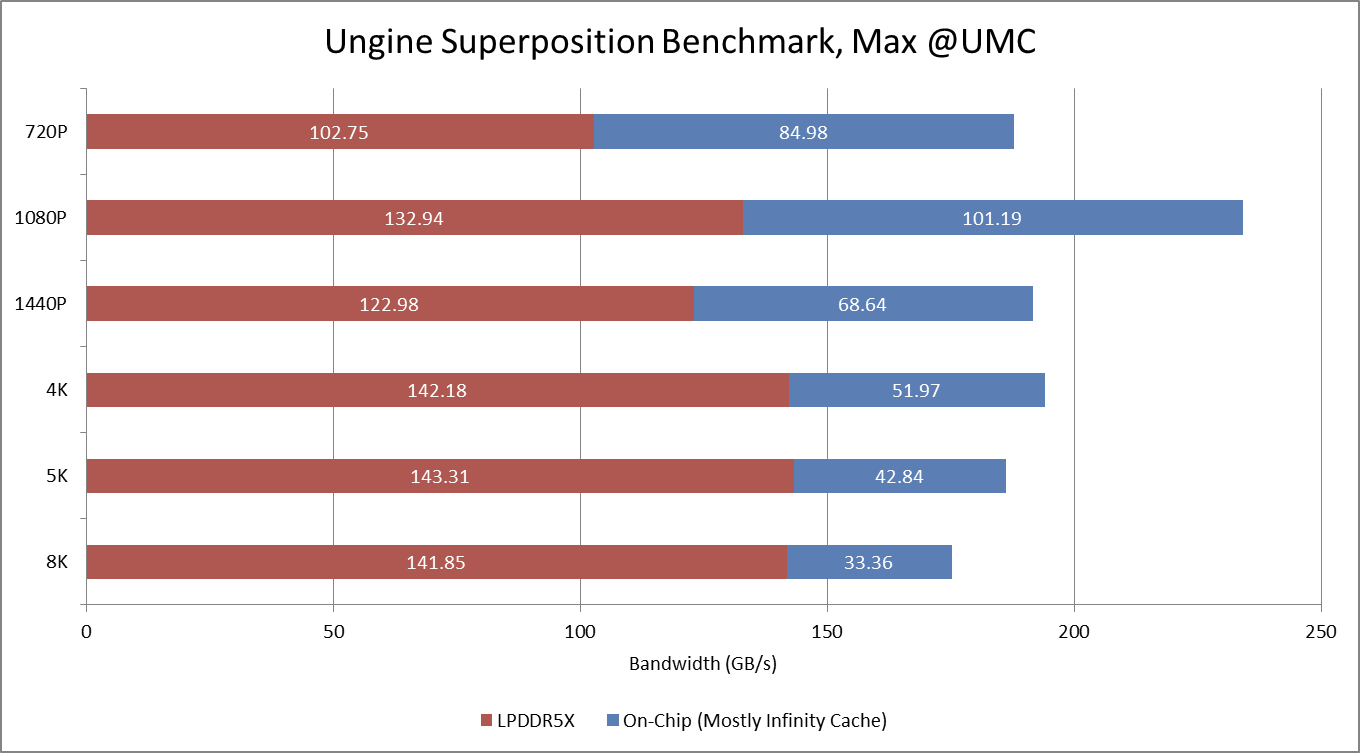

I logged data over some arbitrarily selected graphics workloads, then selected the 1-second interval with the highest DRAM bandwidth usage. That should give an idea of whether Strix Halo comes close to reaching DRAM bandwidth limits. Overall, the 32 MB cache seems to do its job. Strix Halo is able to stay well clear of the 256 GB/s theoretical bandwidth limit that its LPDDR5X-8000 setup can deliver.
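Picking the peak interval out of a log can be sketched like this. The `Sample` layout and the logged values are hypothetical. Only the 256 GB/s limit comes from the text.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    second: int       # index of the 1-second interval
    cs_gbps: float    # estimated traffic at the Coherent Stations
    dram_gbps: float  # traffic observed at the memory controllers


DRAM_PEAK_GBPS = 256.0  # theoretical LPDDR5X-8000 limit quoted above


def peak_dram_interval(log):
    """Return the logged interval with the highest DRAM bandwidth."""
    return max(log, key=lambda s: s.dram_gbps)


# Made-up log entries.
log = [
    Sample(0, 120.0, 40.0),
    Sample(1, 210.0, 96.0),
    Sample(2, 180.0, 64.0),
]

peak = peak_dram_interval(log)
utilization = peak.dram_gbps / DRAM_PEAK_GBPS
print(peak.second, utilization)  # 1 0.375
```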

Approximate CS-side bandwidth demands do indicate several workloads can push uncomfortably close to 256 GB/s. A workload doesn’t necessarily have to get right up to bandwidth limits for memory-related performance issues to start cropping up. Under high bandwidth demand, requests can start to pile up in various queues and end-to-end memory latency can increase. GHPC and Unigine Valley fall into that category. 3DMark Time Spy Extreme would definitely be DRAM bandwidth bound without the memory side cache.

Picking an interval with maximum bandwidth demands at the CS gives an idea of how much bandwidth Strix Halo’s GPU can demand. Strix Halo has the same 2 MB of graphics L2 cache that AMD’s older 7000 series “Phoenix” mobile chip had, despite more than doubling GPU compute throughput. Unsurprisingly, the GPU can draw a lot of bandwidth across Infinity Fabric. 3DMark Time Spy again stands out. If AMD wanted to simply scale up DRAM bandwidth without spending die area on cache, they would need well over 335 GB/s from DRAM.

Curiously, Digital Foundry notes that Strix Halo has very similar graphics performance to the PS5. The PS5 uses a 256-bit, 14 GT/s GDDR6 setup that’s good for 448 GB/s of theoretical bandwidth, and doesn’t have a memory side cache like Strix Halo. 448 GB/s looks just barely adequate for satisfying Time Spy Extreme’s bandwidth needs. If Strix Halo didn’t need to work in power constrained mobile devices, and didn’t need high memory capacity for multitasking, perhaps AMD could have considered a GDDR6 setup. To bring things back around to Infinity Cache, it seems to do very well in the Time Spy Extreme interval discussed above. It captures approximately 73% of memory traffic going through Infinity Fabric, which is good even from a hitrate point of view.
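The arithmetic behind those figures is short enough to spell out. Theoretical bandwidth is bus width in bytes times transfer rate. For the Time Spy Extreme interval, I take 335 GB/s as a floor for CS-level demand and 73% as the captured fraction, both from the text. The resulting DRAM figure is therefore only approximate.

```python
def theoretical_gbps(bus_bits, gt_per_s):
    """Peak DRAM bandwidth in GB/s for a bus width and transfer rate."""
    return bus_bits / 8 * gt_per_s


strix_halo = theoretical_gbps(256, 8.0)  # LPDDR5X-8000
ps5 = theoretical_gbps(256, 14.0)        # 14 GT/s GDDR6

cs_demand = 335.0       # GB/s at the CS level, lower bound from the text
captured = 0.73         # fraction of traffic that never reaches DRAM
dram_traffic = cs_demand * (1 - captured)

print(strix_halo)              # 256.0
print(ps5)                     # 448.0
print(round(dram_traffic, 1))  # 90.5
```

Roughly 90 GB/s of DRAM traffic against a 256 GB/s limit leaves plenty of headroom, while the same 335 GB/s without a cache would exceed Strix Halo's limit and fit under the PS5's.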

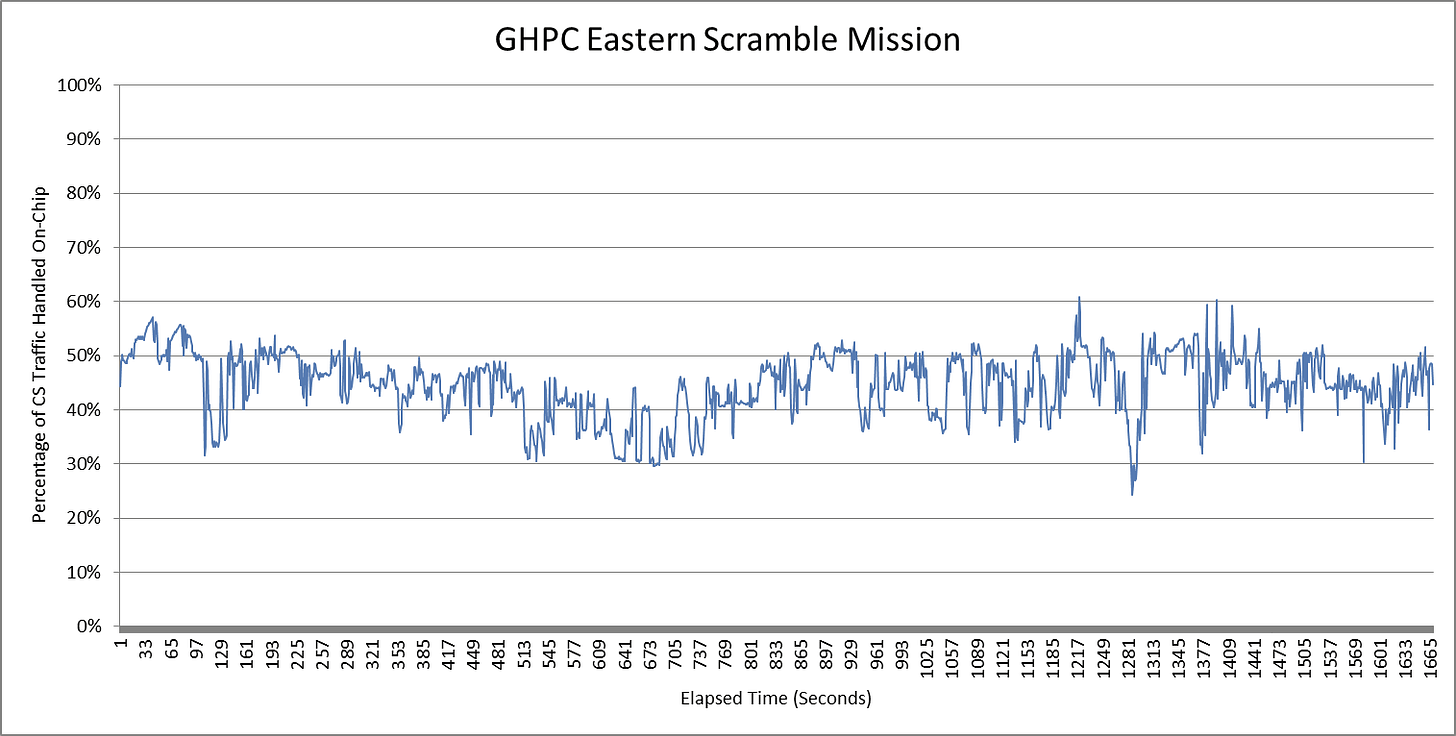

The two bar graphs above already hint at how bandwidth demands and cache hitrates vary across workloads. Those figures vary within a workload as well, though plotting all of the logged data would be excessive. Variation both across and within workloads makes it extremely difficult to summarize cache efficacy with a single hitrate figure. Plotting the percentage of traffic at the CS not observed at the UMCs as a proxy for hitrate further emphasizes that point.

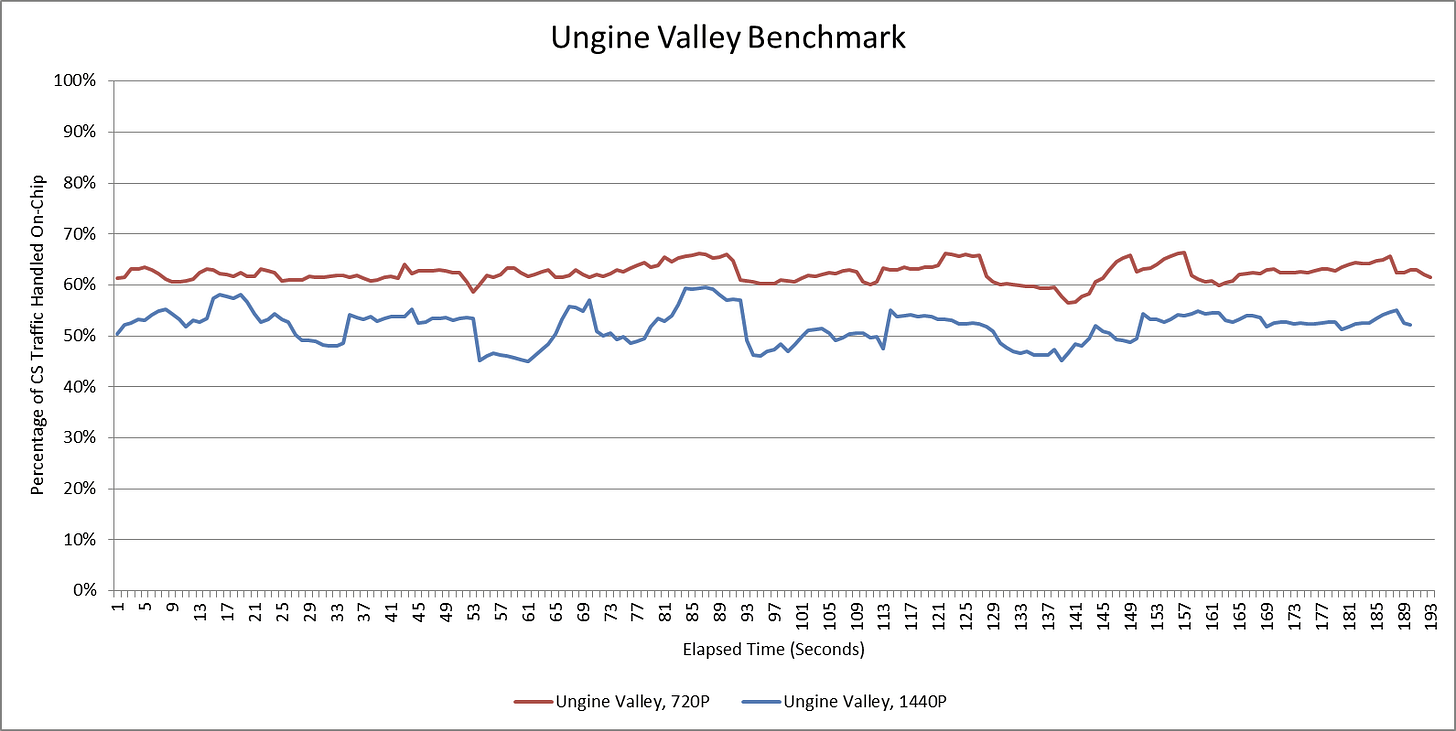

Resolution settings can impact cache hitrate as well. While I don’t have a direct look at hitrate figures, plotting with the data I do have suggests increasing resolution in the Unigine Valley benchmark tends to depress hitrate.

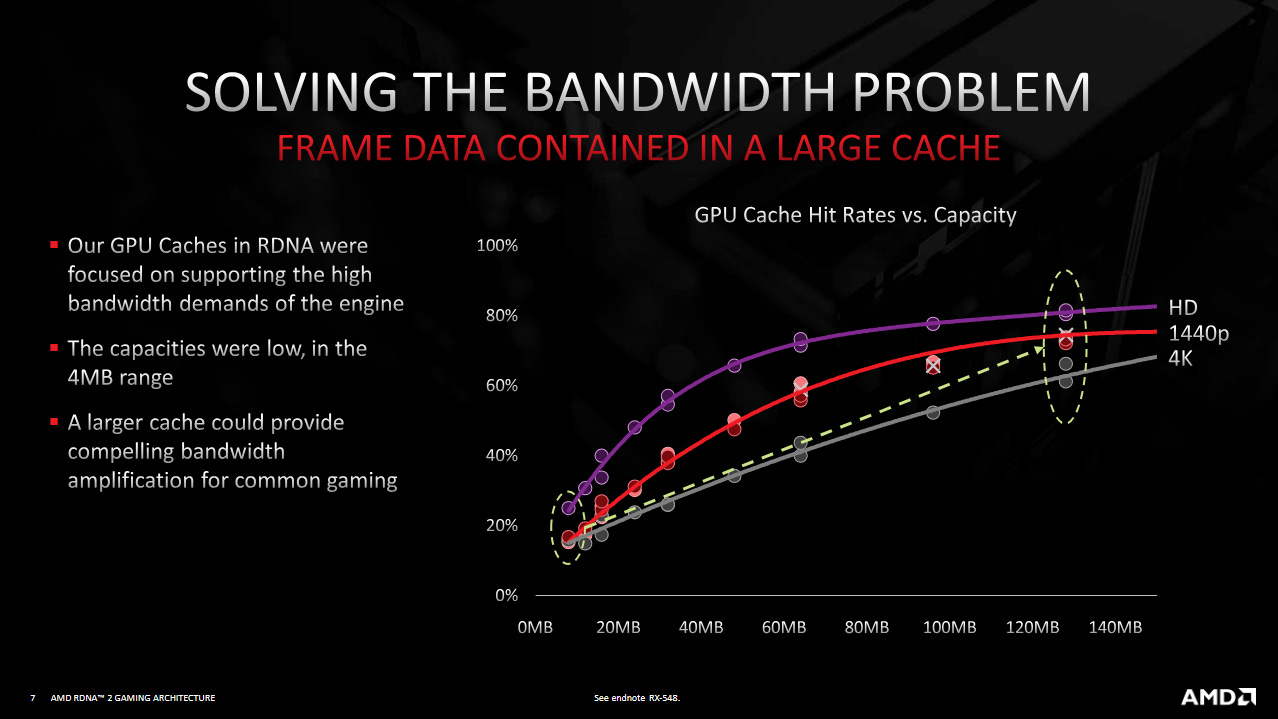

AMD presented a slide at Hot Chips 2021 with three lines for different resolutions across a set of different cache capacities. I obviously can’t test different cache capacities. But AMD’s slide does raise the question of how well a 32 MB cache can do at a wider range of resolutions, and whether bandwidth demands remain under control at resolutions higher than what AMD may have optimized the platform for.

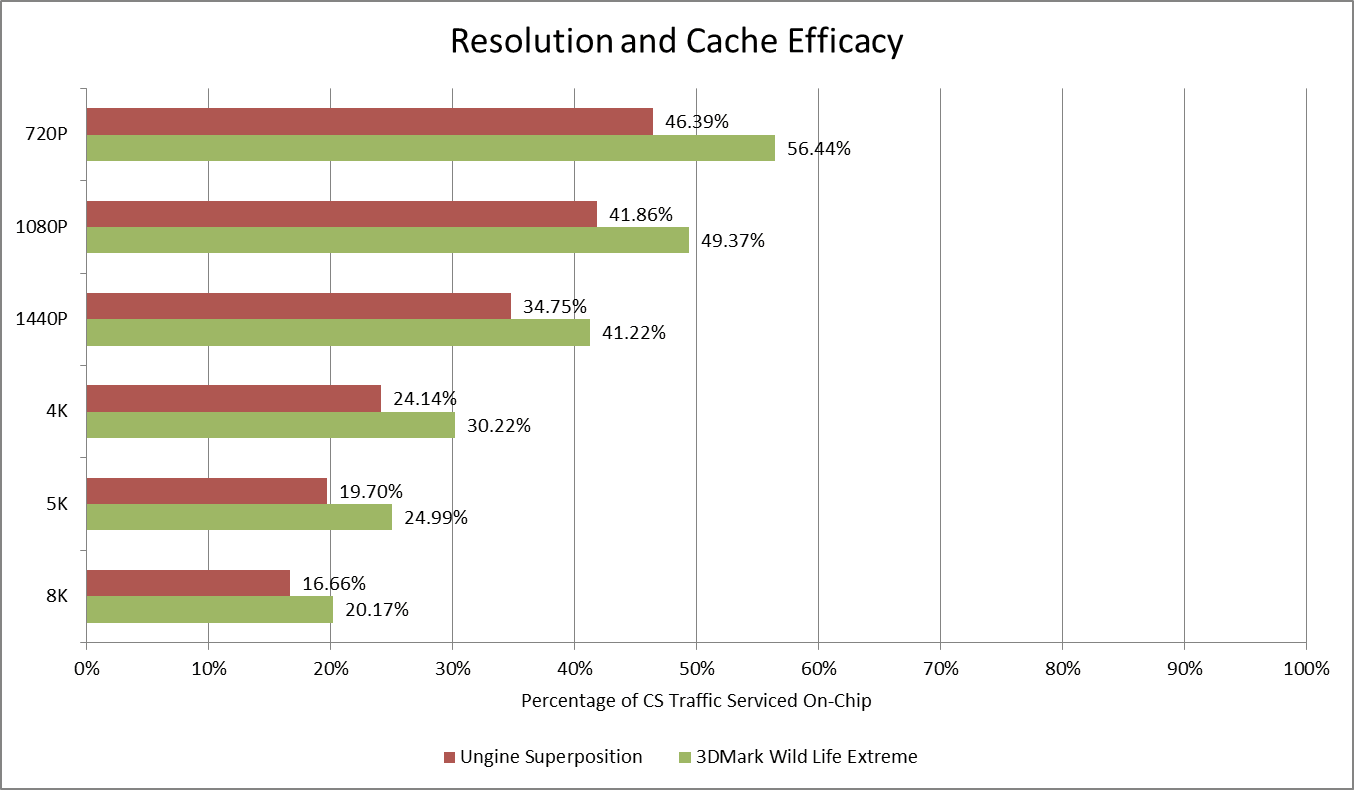

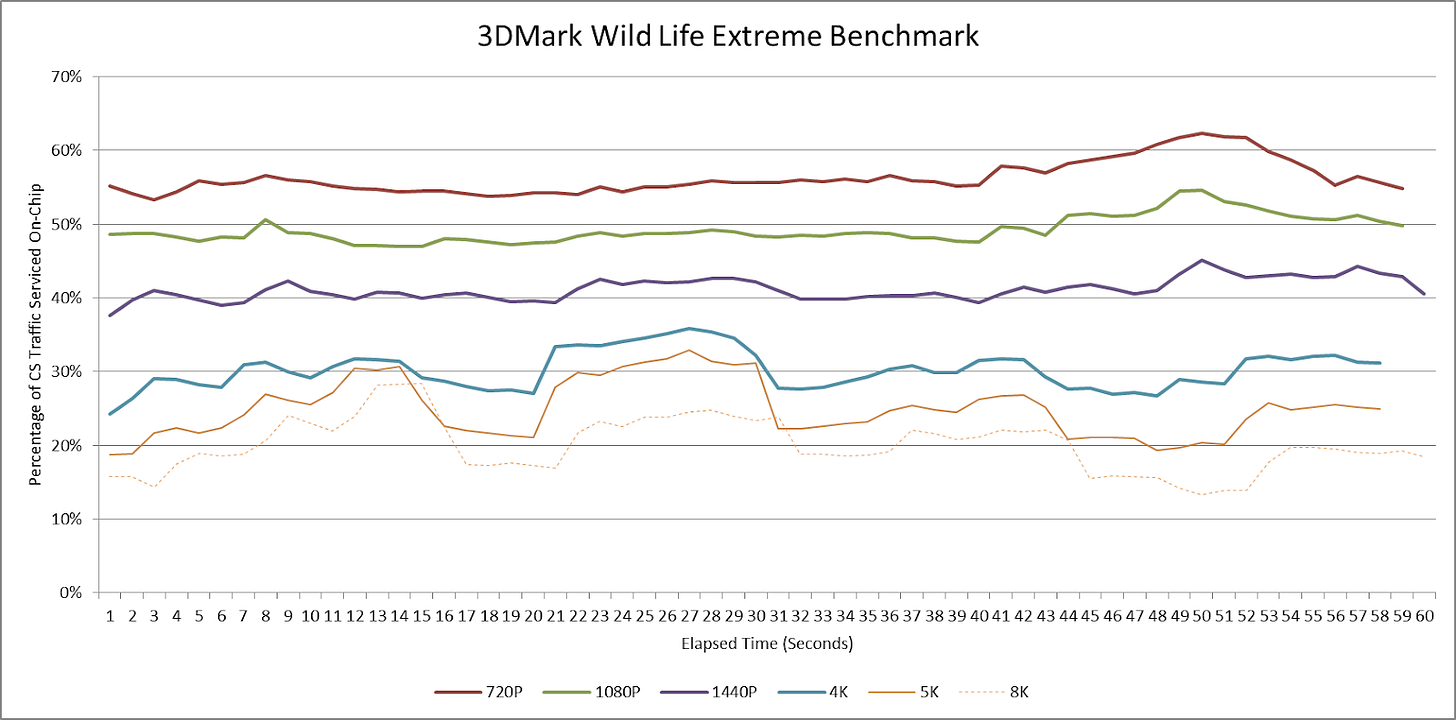

Unigine Superposition and 3DMark workloads can both target a variety of resolutions without regard to monitor resolution. Logging data throughout benchmark runs at different resolutions shows the 32 MB cache providing reasonable “bandwidth amplification” at reasonable resolutions. At unreasonable resolutions, the cache is still able to do something. But it’s not as effective as it was at lower resolutions.
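One way to put a number on “bandwidth amplification” is the ratio of traffic seen at the CS-es to traffic that reaches DRAM. That definition is my reading of the term rather than something stated in the text. Under it, amplification and the proxy hitrate are two views of the same measurement.

```python
def bandwidth_amplification(cs_gbps, dram_gbps):
    """Ratio of fabric-level traffic to DRAM traffic (assumed definition)."""
    if dram_gbps <= 0:
        raise ValueError("DRAM traffic must be positive")
    return cs_gbps / dram_gbps


def amplification_from_hitrate(hitrate):
    """Same quantity expressed through the proxy hitrate: 1 / (1 - h)."""
    if not 0.0 <= hitrate < 1.0:
        raise ValueError("hitrate must be in [0, 1)")
    return 1.0 / (1.0 - hitrate)


print(bandwidth_amplification(300.0, 100.0))  # 3.0
print(amplification_from_hitrate(0.75))       # 4.0
```

The relationship is nonlinear, so a drop in hitrate from 75% to 50% halves the amplification from 4x to 2x.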

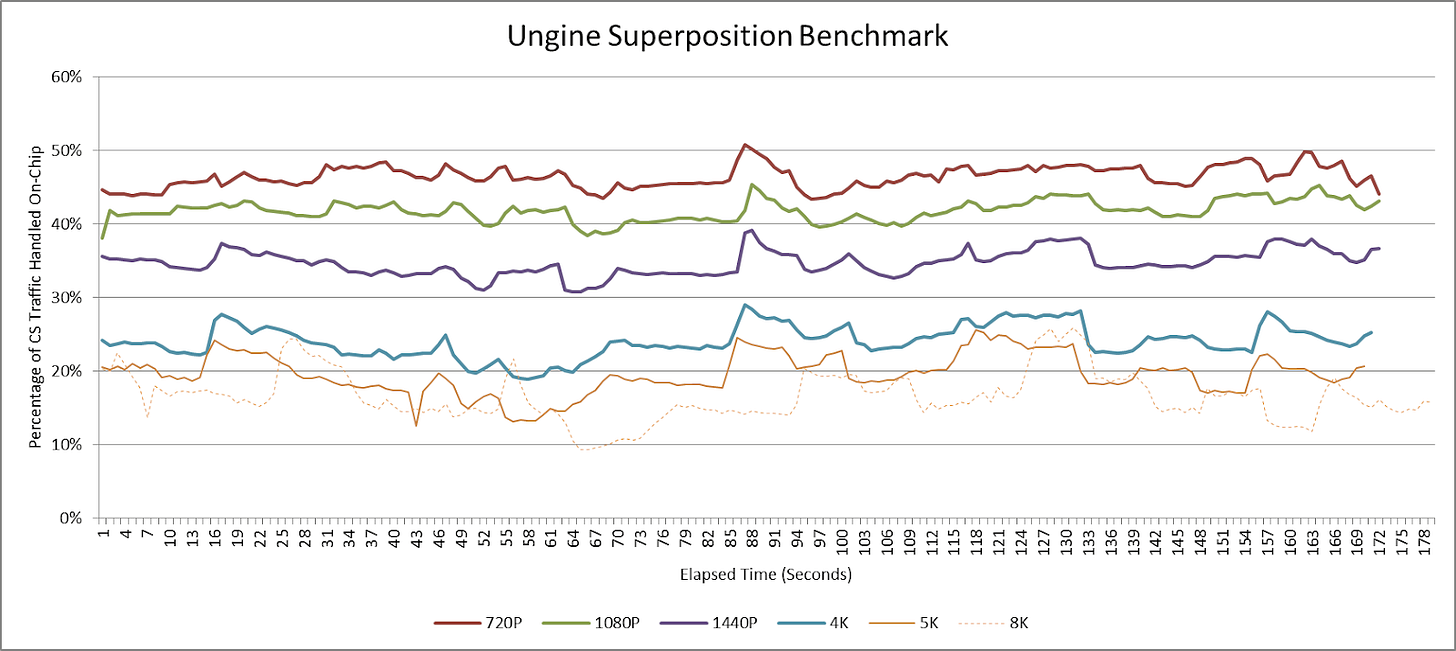

Plotting data over time shows spikes and dips in Infinity Cache efficacy occur at remarkably consistent times, even at different resolutions. 8K is an exception with the Superposition benchmark. The 8K line looks stretched out, possibly because Strix Halo’s GPU was only able to average a bit over 10 FPS, and time got slowed a bit.

If Strix Halo’s iGPU were capable of delivering over 30 FPS in Superposition, AMD would definitely need a larger cache, more DRAM bandwidth, or a combination of both. Taking a simplistic view and tripling maximum observed 8K bandwidth demands would give a figure just north of 525 GB/s. But Strix Halo’s iGPU clearly wasn’t built with such a scenario in mind, and AMD’s selected combination of cache capacity and DRAM bandwidth works well enough at all tested resolutions. While high resolutions create the most DRAM traffic, 1080P shows the heaviest bandwidth demands at the Infinity Fabric level.
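The simplistic scaling above assumes bandwidth demand grows linearly with frame rate. In the sketch below, the 175 GB/s input is back-derived from the 525 GB/s figure divided by three and is only illustrative. The measured peak is not stated in the text, and the 10 FPS starting point rounds down the “bit over 10 FPS” average.

```python
DRAM_PEAK_GBPS = 256.0  # theoretical LPDDR5X-8000 limit


def scaled_demand(observed_gbps, observed_fps, target_fps):
    """Linearly scale bandwidth demand to a target frame rate."""
    if observed_fps <= 0:
        raise ValueError("observed_fps must be positive")
    return observed_gbps * target_fps / observed_fps


# Illustrative input only: 525 GB/s / 3, not a measured value.
scaled = scaled_demand(175.0, 10.0, 30.0)
print(scaled)                   # 525.0
print(scaled > DRAM_PEAK_GBPS)  # True
```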

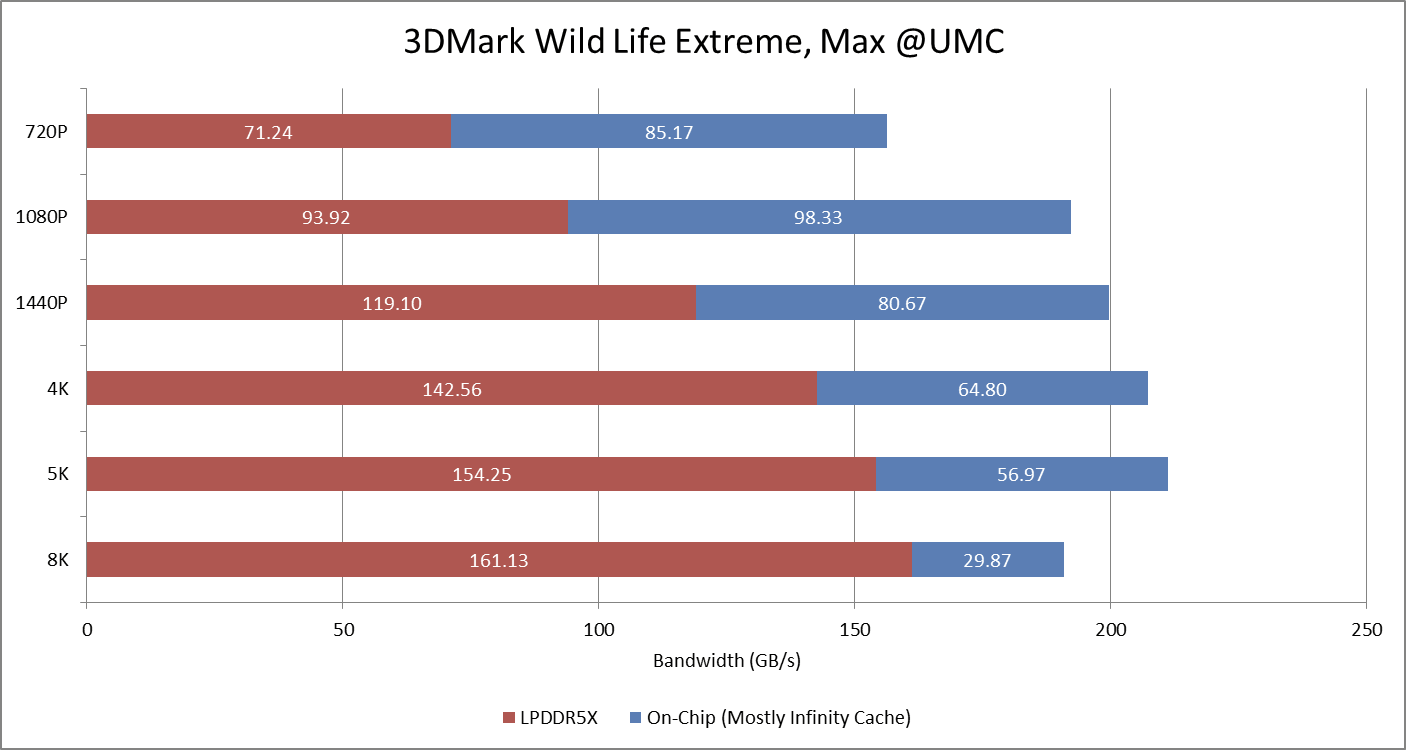

3DMark Wild Life Extreme is a better example because it’s a lightweight benchmark designed to run on mobile devices. Strix Halo’s iGPU can average above 30 FPS even when rendering at 8K. Again, DRAM bandwidth demands increase and Infinity Cache becomes less effective as resolution goes up. But Infinity Cache still does enough to keep the chip well clear of DRAM bandwidth limits. Thus the cache does its job, and the bandwidth situation remains under control across a wide range of resolutions.

Bandwidth demands are more important than hitrates. Curiously though, Wild Life Extreme experiences increasingly severe hitrate dips at higher resolutions around the 16-19 and 45-52 second intervals. Those dips barely show at 1440P or below. Perhaps a 32 MB cache’s efficacy also shows more variation as resolution increases.
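Dips like those can be located in a logged series with a simple threshold scan. The sketch below uses a made-up series and an arbitrary 0.5 threshold. It is just one way to compare dip timing across resolutions.

```python
def find_dips(hitrates, threshold):
    """Return inclusive (start, end) index pairs where hitrate < threshold."""
    dips = []
    start = None
    for i, h in enumerate(hitrates):
        if h < threshold and start is None:
            start = i                    # a dip begins
        elif h >= threshold and start is not None:
            dips.append((start, i - 1))  # the dip ended on the previous sample
            start = None
    if start is not None:
        dips.append((start, len(hitrates) - 1))
    return dips


# Made-up per-second proxy hitrates.
series = [0.7, 0.7, 0.4, 0.3, 0.7, 0.6, 0.2]
dips = find_dips(series, 0.5)
print(dips)  # [(2, 3), (6, 6)]
```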

A few errors here – I said Core Coherent Master read/write beats were 32B and 64B/cycle. It’s not per cycle, it’s per data beat. And I meant to say reads outnumber writes at the end.

Chip designers have to tune their designs to perform well across a wide variety of workloads. Cache sizes are one parameter on the list. AMD chose to combine a 32 MB cache with 256 GB/s of DRAM bandwidth, and it seems to do well enough across the workloads I tested. Monitoring at the CS-es and UMCs also supports AMD’s data showing that higher resolutions tend to depress hitrate. Those results explain why larger GPUs tend to have larger caches and higher DRAM bandwidth.

At a higher level, GPU bandwidth demands have been a persistent challenge for large iGPUs and have driven a diverse set of solutions. Over a decade ago, Intel created “halo” iGPU variants and paired a 128 MB eDRAM cache while sticking with a typical 128-bit client memory bus. AMD’s console chips use large and fast DRAM buses. The PS5 is one example. Strix Halo does a bit of both. It combines modest cache capacity with more DRAM bandwidth than typical client chips, but doesn’t rely as much on DRAM bandwidth as console chips.

Those varied approaches to the bandwidth problem are fascinating. Watching how Infinity Cache behaves in various graphics workloads has been fascinating as well. But everything would be so much more fun if AMD’s tools provided direct data on Infinity Cache hitrates. That applies to both integrated and discrete GPUs. Infinity Cache is a well established part of AMD’s strategy. It has been around for several generations, and now has a presence in AMD’s mobile lineup too. I suspect developers would love to see Infinity Cache hitrates in addition to the data on GPU-private caches that AMD’s current tools show.

If you like the content then consider heading over to the Patreon or PayPal if you want to toss a few bucks to Chips and Cheese. Also consider joining the Discord.



🕒 Posted on 1761107929